Scraping Tweets with Python - Simple Steps on How To Do It

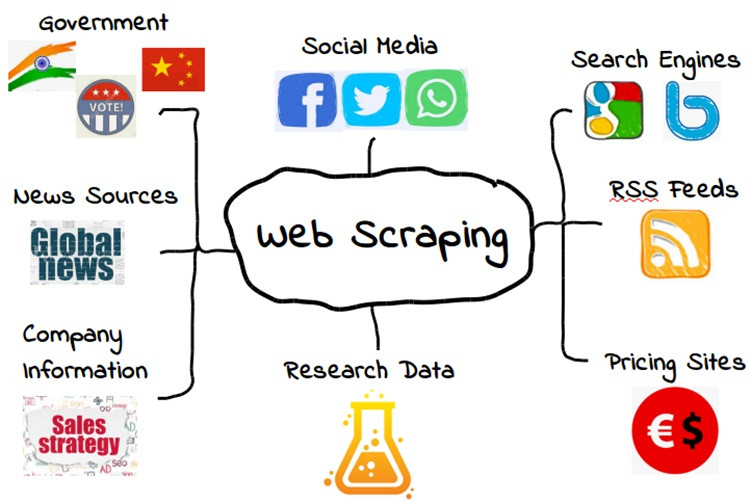

Social media platforms bring together people from countries across the globe in one place. They were initially used mainly for communication, but they are now also places for entertainment, information sharing, research, and making money. They are also where people share their opinions on all kinds of topics, which is why these platforms are a popular source for data collection.

One of the social media giants across the globe is Twitter. Many researchers turn to it when trying to predict stock market movements. Its data also helps gauge moods at a national level, because it is a huge psychological database from which you can pull every "Tweet" (a short message) about a given topic. How?

This is where Python Web Scraping comes in...

For developers, one of the most useful tips for getting work done efficiently and effectively is to use the right tools. For data collection and web scraping automation, Python is undeniably one of the best choices.

Python is one of the easiest programming languages to learn, especially for beginners in web development. Its syntax is clear and straightforward and does not rely on many non-alphabetic symbols, so even newcomers can read and understand the code they write.

Python also offers large libraries, including Pandas, NumPy, and Matplotlib, which are designed to work together, so you do not need to worry about moving data sets from one library to another. Python is also dynamically typed: developers do not have to declare the type of every variable, which saves a lot of time, and its syntax reads almost like plain English.

Furthermore, deciding where scopes and blocks begin and end is easy in Python, because its syntax uses indentation to mark them, so the layout of the code shows its structure.

With Python, you can write simple code even for complicated scraping tasks. And because Python is one of the most widely known programming languages, many developers can help clear up your confusion when questions come up along the way.

How to Use Python Web Scraping:

When your code runs, it sends a request to the website you chose. If the website's server accepts the request, it sends back the page, and your code can read its HTML or XML, analyze it, and find the data you need.
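That request/response exchange can be sketched with Python's standard library alone. The User-Agent string below is a placeholder, not something this article prescribes; a server that refuses the request typically answers with an error status such as 403, which `urllib` raises as `HTTPError`.

```python
from typing import Optional
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen

# Placeholder identification string (hypothetical); describe your own scraper here
USER_AGENT = "example-scraper/0.1"


def build_request(url: str) -> Request:
    """Prepare an HTTP GET request that identifies the scraper."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> Optional[str]:
    """Send the request and return the page's HTML, or None if it failed."""
    try:
        with urlopen(build_request(url), timeout=timeout) as response:
            # Decode with the charset the server declares, falling back to UTF-8
            charset = response.headers.get_content_charset() or "utf-8"
            return response.read().decode(charset, errors="replace")
    except HTTPError as err:
        # The server answered but declined, e.g. 403 Forbidden or 429 Too Many Requests
        print(f"Request refused: HTTP {err.code}")
        return None
    except URLError as err:
        # The server could not be reached at all (DNS failure, no network, ...)
        print(f"Could not reach server: {err.reason}")
        return None
```

Calling `fetch_html("https://example.com/")` returns the page's HTML as a string, or `None` if the server refused the request or could not be reached. `HTTPError` is caught before `URLError` because it is a subclass of it.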

Step 1 - Choose the URL of the website from which you want to collect data.

Step 2 - Read the page and find the data you want to scrape from the website of your choice.

Step 3 - Write the code.

Step 4 - Run the code to extract the data.

Step 5 - Keep the data in the necessary format.
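Steps 2 to 5 can be sketched with the standard library alone. Here the HTML is a short inline string standing in for a downloaded page, and collecting the text of every `<p>` element is an assumption chosen for illustration; on a real site you would target whichever tags hold the data you found in step 2.

```python
import csv
import io
from html.parser import HTMLParser
from typing import List

# Stand-in for a page downloaded in step 1 (hypothetical markup)
SAMPLE_HTML = """
<html><body>
  <h1>Example page</h1>
  <p>First paragraph.</p>
  <p>Second <b>paragraph</b>.</p>
</body></html>
"""


class ParagraphCollector(HTMLParser):
    """Steps 2 to 4: walk the HTML and collect the text inside each <p> element."""

    def __init__(self) -> None:
        super().__init__()
        self.paragraphs: List[str] = []
        self._inside_p = False
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._inside_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "p" and self._inside_p:
            self.paragraphs.append("".join(self._buffer).strip())
            self._inside_p = False

    def handle_data(self, data):
        # Text inside nested tags such as <b> also arrives here
        if self._inside_p:
            self._buffer.append(data)


def to_csv(rows: List[str]) -> str:
    """Step 5: keep the extracted data in CSV format (returned as a string)."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["paragraph"])
    for row in rows:
        writer.writerow([row])
    return out.getvalue()


collector = ParagraphCollector()
collector.feed(SAMPLE_HTML)
print(to_csv(collector.paragraphs))
```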

Some sites allow you to collect their data through web scraping, while others may block you. To see which parts of a site its owner asks automated crawlers not to access, check the website's “robots.txt” file. To view it, simply add “/robots.txt” to the end of the domain of the website you wish to collect data from.
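Python's standard library can read a robots.txt file and tell you whether a given path is allowed. The rules and URLs below are made-up placeholders; against a real site you would call `set_url()` with the site's robots.txt address and then `read()` to download it, instead of calling `parse()` on an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here instead of downloading a real file
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "*" means "any crawler"; pass your own User-Agent name to check rules aimed at it
for url in ("https://example.com/articles/1", "https://example.com/private/data"):
    allowed = parser.can_fetch("*", url)
    print(url, "allowed" if allowed else "disallowed")
```

Python's parser applies the first rule that matches a path, so `/private/...` is disallowed while everything else falls through to `Allow: /`.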

Which Python libraries can you use for web scraping?

Python offers a large collection of libraries for many different purposes. For data collection, the following libraries can help you do the job best:

- Selenium - This web testing library can help you automate your browser activity.

- Beautiful Soup - This library parses HTML and XML documents into "parse trees", which makes it easier to find and collect the data you need.

- Pandas - This is the library you can use to manipulate and analyze data. It can store the data you collect in the format of your choice, such as CSV or Excel.

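For Twitter specifically, the snscrape library can search tweets directly, and Pandas can then store the results:

```python
# Import the Twitter module of the snscrape library
import snscrape.modules.twitter as sntwitter
import pandas as pd

# Term to search for
search = "webscraping"

# List of collected tweets, one [date, username, text] row each
tweets = []

# Maximum number of tweets to extract
tweetLimit = 5

for tweet in sntwitter.TwitterSearchScraper(search).get_items():
    if len(tweets) == tweetLimit:
        break
    # The author is a User object; rawContent holds the tweet text
    # (older snscrape releases expose the text as tweet.content)
    tweets.append([tweet.date, tweet.user.username, tweet.rawContent])

# Store the tweets in a DataFrame and print it
tweetsDF = pd.DataFrame(tweets, columns=['Date', 'User', 'Tweet'])
print(tweetsDF)
```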
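Once the tweets are in a DataFrame, Pandas can write them out in whichever format you need. This sketch uses two placeholder rows in place of real scraped tweets, and the file names are hypothetical; `to_csv` and `to_json` are standard DataFrame methods, while Excel output (`to_excel`) additionally needs an engine such as openpyxl installed.

```python
import pandas as pd

# Placeholder rows standing in for scraped tweets (not real data)
tweets = [
    ["2023-01-01 10:00:00", "user_a", "example tweet text one"],
    ["2023-01-02 11:30:00", "user_b", "example tweet text two"],
]
tweetsDF = pd.DataFrame(tweets, columns=["Date", "User", "Tweet"])

# Parse the date strings so the column has a proper datetime type
tweetsDF["Date"] = pd.to_datetime(tweetsDF["Date"])

# Keep the data as CSV (index=False drops the 0, 1, ... row labels)
tweetsDF.to_csv("tweets.csv", index=False)

# Or as JSON, one record per tweet, with ISO-formatted dates
tweetsDF.to_json("tweets.json", orient="records", date_format="iso")
```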

Inspect the website of your choice...

It is important to inspect the site you want to collect data from so that you avoid gathering information that will not be useful to you. To do this, first locate the links to the files you want to collect. A page has several layers of "tags" and code, and inspecting the site helps you determine where the data you want to gather can be found. How do you inspect a website? Simply right-click on the page and click "Inspect". A panel showing the page's raw code will then open.
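After Inspect shows you which tag and class wrap the data you want, you can point Beautiful Soup at exactly those elements. The markup and the class names `post`, `author`, and `post-text` below are hypothetical stand-ins for whatever you find when inspecting your target site.

```python
from bs4 import BeautifulSoup

# Hypothetical markup of the kind you might see in the browser's Inspect panel
html = """
<div class="post">
  <span class="author">@user_a</span>
  <p class="post-text">First example post</p>
</div>
<div class="post">
  <span class="author">@user_b</span>
  <p class="post-text">Second example post</p>
</div>
"""

# Build the parse tree with Python's built-in HTML parser
soup = BeautifulSoup(html, "html.parser")

rows = []
# CSS selectors built from the tags and classes found while inspecting the page
for post in soup.select("div.post"):
    author = post.select_one("span.author").get_text(strip=True)
    text = post.select_one("p.post-text").get_text(strip=True)
    rows.append((author, text))

print(rows)
```

Selecting by the container first (`div.post`) keeps each author paired with their own text, rather than collecting all authors and all texts in two separate lists.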